Part of the collection of articles on MVAP theory

The History and Role of Software Modeling in the Development of MVAP

No subjectively generated theory, however carefully constructed and thought through, can be entirely free of errors (fornit.ru/1012). Moreover, it cannot provide a detailed model description without attempts at practical implementation. Programmers are well aware of this: they constantly find that any newly written code fragment, once tested, turns out to contain bugs, inaccuracies, and suboptimal solutions.

Programming is an environment governed by strict cause-and-effect logic. Logical contradictions therefore cause program crashes rather than mere theoretical inconsistencies, and unoptimized mechanisms visibly waste execution time. A working program may also produce results the original theory did not anticipate. Such discrepancies draw attention to themselves, and the code's behavior makes it possible to trace their underlying causes. This can yield valid reasons to adjust the theory itself.

What was initially confined within subjective assumptions thus transcends those boundaries into a broader conceptual space. According to Gödel’s incompleteness theorem, it was impossible—within the original framework—to assess the completeness of those assumptions or prove the consistency of all their components. Thus, software implementation not only tests a theory for internal consistency but also expands its conceptual horizon, enabling further progress on a verified foundation.

Imagine we are modeling risk-taking behavior in a living organism. The theory states: “The caution actor suppresses the exploration actor when threat assessment is high.” We program this rule. Yet in simulation, our artificial creature falls into complete paralysis in any novel situation, even a potentially beneficial one. The theory did not predict such a catastrophic imbalance; the simulation exposed it and thereby revealed the decisive role of individual experiential history.
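The thought experiment above can be made concrete with a minimal sketch. It is not taken from the MVAP code base: the `Creature` class, the `THREAT_THRESHOLD` value, and the assumption that a creature with no history rates every unknown situation as maximally threatening are all hypothetical, chosen only to show how the bare rule produces paralysis and how experiential history changes the outcome.

```python
from dataclasses import dataclass, field

THREAT_THRESHOLD = 0.5  # hypothetical cutoff for "threat assessment is high"


@dataclass
class Creature:
    # situation name -> threat level learned from past outcomes (hypothetical memory)
    history: dict = field(default_factory=dict)

    def assess_threat(self, situation: str) -> float:
        if situation in self.history:
            return self.history[situation]
        if self.history:
            # Assumption: a novel situation is judged by the average of past outcomes.
            return sum(self.history.values()) / len(self.history)
        # Assumption: with no experience at all, the unknown is maximally threatening.
        return 1.0

    def act(self, situation: str) -> str:
        # The rule from the theory: caution suppresses exploration when threat is high.
        if self.assess_threat(situation) > THREAT_THRESHOLD:
            return "freeze"
        return "explore"


naive = Creature()
experienced = Creature(history={"berry": 0.1, "stream": 0.2, "snake": 0.9})

novel = ["new_fruit", "new_shelter"]
naive_actions = [naive.act(s) for s in novel]
experienced_actions = [experienced.act(s) for s in novel]
```

In this sketch the naive creature freezes in every novel situation, while the creature with a mostly benign history explores the same situations but still avoids the known danger. The rule itself is identical in both cases; only the individual history differs.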

As a result of all software modeling efforts based on principles of individual adaptivity, the conceptual framework of MVAP theory has become comprehensive and internally interconnected: fornit.ru/70320.

Here is how it unfolded.

Early Attempts

The very first modeling efforts were based on the first book published in 1991 (fornit.ru/7231). At the design bureau of the institute, a “neuristor” circuit—a functional analog of a neuron—was implemented. This neuristor-based mechanism was assembled to sequence actions during the measurement of water flow velocity in an irrigation channel using ultrasonic probing methods. This circuit was conceived as a fragment of an instinct for executing specific actions. In the actual engineering project, it replaced a standard shift register that would typically manage sequential operations with some conditional logic (e.g., adjusting for signal strength in water of varying turbidity: mornings were clear, but ultrasonic transmission degraded sharply as water warmed). Since the circuit implementation was carried out by a single electronics engineer (I was the lead designer), the chief engineer accepted it without deep scrutiny—though he did ask a pertinent and fundamental question: “Why didn’t you just use a standard clocked shift register chip instead of building this stack of neuristors?”

This question was both interesting and principled. My explanation—that this was an attempt to begin integrating intelligent systems—satisfied the chief engineer and did not raise doubts in me, as I held an unwavering belief that intelligent systems could only be built in a brain-like (“gneisoid”) manner.

In this case, the neuristor circuit was not merely a replacement for a shift register but an attempt to embody, in “hardware,” logic based on the dynamic interaction of states—a feature characteristic not of sequential automata but of living responses. Although both circuits (neuristor-based and shift register) could functionally produce the same action sequence, only the former simulated conditional adaptability: behavioral changes not on a rigid time schedule but in response to signal quality (e.g., repeating a measurement if echo amplitude was insufficient). This represents a subtle yet fundamental distinction: an automaton responds to a clock tick, whereas a neuristor-based structure responds to an internal representation of the environment’s state.
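The distinction between the two control styles can be sketched in software. This is an illustrative analogy only, not a description of the original neuristor hardware: the step names, the `min_amplitude` threshold, and the retry limit are assumptions introduced for the example.

```python
STEPS = ["emit_pulse", "measure_echo", "report_velocity"]


def clocked_sequence(ticks):
    """Shift-register style: one step per clock tick, regardless of signal quality."""
    return [STEPS[t % len(STEPS)] for t in range(ticks)]


def state_driven_sequence(echo_amplitudes, min_amplitude=0.3, max_retries=3):
    """State-driven style: the next step depends on the measured echo amplitude."""
    log = []
    readings = iter(echo_amplitudes)
    for _ in range(1 + max_retries):
        log.append("emit_pulse")
        amplitude = next(readings, 0.0)  # a missing reading counts as no echo
        log.append("measure_echo")
        if amplitude >= min_amplitude:
            log.append("report_velocity")
            return log
        # Echo too weak: repeat the measurement instead of moving on.
    log.append("report_failure")
    return log


clear_water = state_driven_sequence([0.9])
turbid_water = state_driven_sequence([0.1, 0.5])
```

With a strong echo both functions produce the same three-step sequence. With a weak first echo only the state-driven version repeats the emit and measure steps before reporting, which is the conditional adaptability described above.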

In 2003, the Fornit website was launched with the explicit goal of advancing the model of individual adaptivity. Although branded as “worldview-oriented,” its broad coverage of diverse worldview-related problems directly contributed to shaping the core model, drawing justification from numerous adjacent disciplines. Of particular importance was the topic of fundamental interactions, which was developed in parallel and is now formalized at fornit.ru/70790, enabling rigorous refutation of exotic consciousness theories such as quantum consciousness. Other speculative ideas, like panpsychism, were countered through worldview-oriented materials on the site: fornit.ru/13268. The results are summarized on the page critiquing exotic theories: fornit.ru/69716.

Criticism of others’ theories becomes productive only when one possesses a formalized alternative of one’s own. Merely stating “panpsychism is wrong” is an opinion. But pointing out that panpsychism offers no mechanism linking micro-experience to macro-experience—and that, within the individual adaptivity model, subjectivity arises as a function of a closed loop of self-observation and prediction—is a scientific position. The page fornit.ru/69716 does not merely reject exotic notions; it demonstrates what a competitive alternative looks like—one capable of explaining the same phenomena without invoking unverifiable entities.

Although called “worldview-oriented,” the Fornit website functions as an interdisciplinary verification environment. Instead of restricting discourse to narrow fields like neuroscience or cybernetics, it encompasses physics, philosophy, epistemology, and even critiques of speculative interpretations.

A particularly unifying and verifying direction was the development of principles of scientific methodology, formalized in the book: fornit.ru/66449.

Within this supportive conceptual context, the theory of individual adaptivity developed alongside a compendium of empirical research data (fornit.ru/a1), leading to a sequence of culminating publications:

- On Systemic Neurophysiology: fornit.ru/1199

- Foundations of Adaptology: fornit.ru/6392

- Lectures from the Asynchronous Online School: fornit.ru/class_1

- What Is the “Self”? A Circuit-Design Approach: fornit.ru/40830

- Foundations of a Fundamental Theory of Consciousness: fornit.ru/68715

Parallel efforts were made to develop software tools for constructing neural network fragments to test neural mechanisms:

- Neural Circuit Constructor: fornit.ru/34235

- Receptor-Effector Connection Schemes: fornit.ru/40662

- Lateral Inhibition: fornit.ru/40662

- Neural Network Modeling: fornit.ru/demo14

- Demo Animations: fornit.ru/an-0

- Lateral Inhibition Demo for Contrast Enhancement: fornit.ru/38103

These software constructors and demo models are not mere illustrations of theory but its “computational body”: they allow testing a mechanistic hypothesis before formulating it verbally. For example, they confirmed empirically that even a simple network of formal neurons implementing lateral inhibition can generate contrastive perception without any external image-processing algorithm. This provides empirical support for the idea that “perception is an active process, not passive registration.” Such a conclusion is difficult to reach subjectively or by merely inspecting equations; it emerges from observing model behavior in real time.
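The contrast effect mentioned above is easy to reproduce in a few lines. The following is a minimal one-dimensional sketch, not the code of the demos on the site: the function name, the `inhibition` coefficient, and the choice to treat a missing neighbor at the border as equal to the unit itself are assumptions made for illustration.

```python
def lateral_inhibition(signal, inhibition=0.2):
    """Each unit's output is its input minus a fraction of its two neighbors' inputs."""
    n = len(signal)
    output = []
    for i, x in enumerate(signal):
        # Assumption: at the borders the missing neighbor is replaced by the unit itself.
        left = signal[i - 1] if i > 0 else x
        right = signal[i + 1] if i < n - 1 else x
        output.append(x - inhibition * (left + right))
    return output


# A step edge: a dark region followed by a bright region.
stimulus = [1, 1, 1, 1, 5, 5, 5, 5]
response = lateral_inhibition(stimulus)

# Difference across the edge versus difference between the uniform regions.
edge_contrast = response[4] - response[3]
plateau_contrast = response[5] - response[2]
```

On the dark side of the edge the response dips below the rest of the dark region, and on the bright side it rises above the rest of the bright region, so the difference across the edge is larger than the difference between the plateaus. No separate edge-detection step is involved; the enhancement follows from the inhibitory connections alone.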

All these constructors and demonstrations were built using neural elements.

Full-Scale Model Implementation

Proceeding to the stage of holistic system construction required boldness bordering on audacity: it was clear that building a system of individual adaptivity would demand enormous computational resources, even with a highly simplified implementation. The existing neural network constructors already illustrated this starkly.

By this time, artificial neural networks, descendants of the multilayer perceptron, were widely used, with sophisticated error-backpropagation and training methods. Although called “neural networks,” they bore little resemblance to networks of biological neurons: real neurons, while functionally resembling highly simplified single-layer perceptrons, cannot maintain the precise synaptic weights that artificial networks require, owing to numerous biological factors. Thus, this was not an evolution of true neural networks but an entirely alternative path, one yielding highly promising practical results, fully realized in systems like GPT. However, these systems lack intrinsic motivation. Systems without internal motivation and autonomous self-regulatory cycles cannot be truly adaptive: they are reactive, not proactive. Because they rest on large-scale perceptron emulation, training them requires supercomputers or cluster systems. Even in their trained form, deploying them on a personal computer demands high-end hardware, making them impractical for ordinary home PCs.

Nevertheless, our ability to use simplified neuron models (functionally aligned with biological neurons: fornit.ru/6449) and experience with neural constructors provided a glimmer of hope. Development team member Alexei Parusnikov managed to inspire the team with his infectious “let’s just try it” enthusiasm.

Implementation of the first system version began with a software neuron structure. A visualizer was even built to display neuron activity as a screen of blinking dots. However, it quickly became evident that the emerging principles did not actually require neuron emulation; forcing everything through neuron-like units only introduced unnecessary complexity. Software circuitry, by contrast, lends itself naturally to structures, objects, functions, and interaction schematics.

Abandoning neurons felt like a weight lifted from our shoulders—everything suddenly became clearer, simpler, and more intuitive.

This was the first grand insight delivered by practical implementation: it allowed us to overcome the subjective dogma that neurons are obligatory for modeling living systems. Only afterward did we fully recognize the absurdity of attempting to base the entire construct on biological nerve cells.

The principles governing the evolutionary hierarchy of adaptivity are substrate-independent—they do not specify what physical elements must implement the system. The model is fundamentally agnostic to implementation medium. The more faithfully this independence is respected, the less resource-intensive and more natural the implementation becomes for the chosen platform. We had no chance of completing the system using neuron emulation or mimicking biological details. Only when we fully embraced the native capabilities of software implementation—without fear of criticism from neuroscience authorities—did we gain the ability to build a working model on an ordinary home PC.

The first version effectively modeled the foundations of homeostatic regulation: vital parameters the system must maintain within normal ranges or face “death” (this endpoint was explicitly implemented), basic behavioral styles (the basis of emotions), inherited reactions, conditioned reflexes, the orienting reflex to significant novelty, and the process of conscious attention.
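The homeostatic core described above can be illustrated with a small sketch. It does not reproduce the actual first version: the class names, the split into a normal range and lethal bounds, and the example numbers are hypothetical, and behavioral styles, reflexes, and attention are left out entirely.

```python
from dataclasses import dataclass


@dataclass
class VitalParameter:
    name: str
    value: float
    normal: tuple  # (low, high) bounds of the normal range
    lethal: tuple  # (low, high) bounds beyond which the organism dies

    def status(self) -> str:
        if not (self.lethal[0] < self.value < self.lethal[1]):
            return "lethal"
        if self.normal[0] <= self.value <= self.normal[1]:
            return "normal"
        return "deviation"


class Organism:
    def __init__(self, parameters):
        self.parameters = list(parameters)
        self.alive = True

    def step(self, drift):
        """Apply per-parameter drift; return the names of parameters needing correction."""
        if not self.alive:
            return []
        for p in self.parameters:
            p.value += drift.get(p.name, 0.0)
        if any(p.status() == "lethal" for p in self.parameters):
            self.alive = False  # explicit "death" endpoint
            return []
        return [p.name for p in self.parameters if p.status() == "deviation"]


organism = Organism([VitalParameter("energy", 0.6, normal=(0.4, 0.9), lethal=(0.0, 1.5))])
needs_after_hunger = organism.step({"energy": -0.3})
alive_after_hunger = organism.alive
organism.step({"energy": -0.5})
alive_after_starvation = organism.alive
```

The list returned by `step` is the point where, in a fuller model, a behavioral style aimed at restoring the deviating parameter would be selected.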

The second major insight from implementation was a revised understanding of how reflexes should be classified and implemented. The need for it became especially evident when the contradictory accounts in the academic neuroscience literature were set against the concrete structural requirements dictated by the model’s conditioned reflex mechanism. This led to the publication of a dedicated scientific paper, bringing clarity and precision to MVAP theory on this matter.

Consciousness, its evolutionary levels of deepening, the function of dreaming, and episodic memory remained somewhat obscure at this stage, though their general principles were emerging from both empirical research data and the theoretical generalizations published in the books listed on the website.

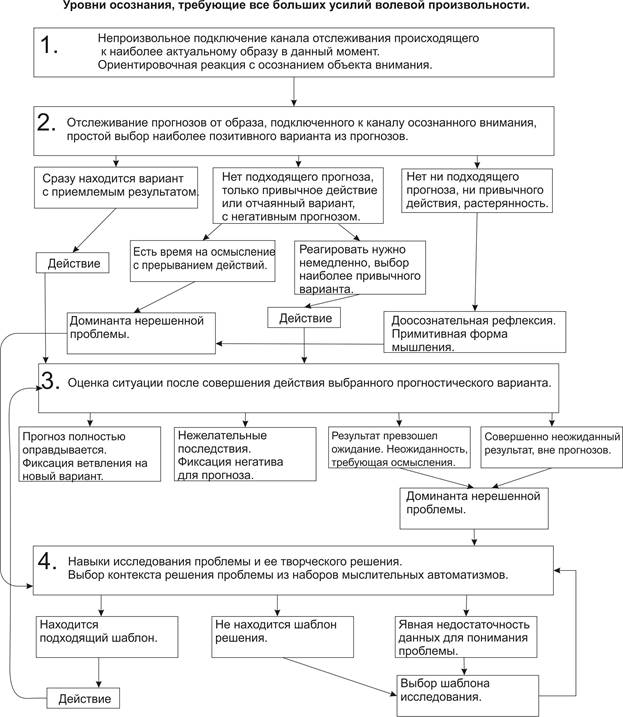

Here is how the consciousness schema appeared at that time:

The results of the first version enabled us to draft a refined implementation plan, accounting for many newly discovered problems, ambiguities, and outright functional errors.

The second version was rewritten from scratch and became qualitatively superior. Numerous unexpected findings and emergent properties led not to a Kuhnian paradigm shift but to iterative refinement—optimizing and discovering simpler, more natural solutions.

From this point onward, newly clarified theoretical approaches enabled publication in scientific journals—not of mere philosophical conjectures, but of principles rigorously verified through practical system implementation: fornit.ru/arts_mvap.

Conversely, these clarified theoretical concepts allowed us to rethink the direction of improved implementation, culminating in a third system version. The most crucial advancement was the definitive adoption of a tree-like structure—for both the ontogenetic hierarchy of formed representations and episodic memory—greatly simplifying and accelerating data retrieval.
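Why a tree speeds up retrieval can be shown with a minimal sketch. This is a generic prefix tree, not the structure used in the third version: the `EpisodeTree` class, the idea of keying episodes by a path of context labels, and the fallback to the nearest more general context are assumptions made for illustration.

```python
class TreeNode:
    def __init__(self):
        self.children = {}  # context label -> child node
        self.episodes = []  # episodes stored exactly at this context


class EpisodeTree:
    def __init__(self):
        self.root = TreeNode()

    def store(self, path, episode):
        """Walk (and create) one node per context label, then attach the episode."""
        node = self.root
        for label in path:
            node = node.children.setdefault(label, TreeNode())
        node.episodes.append(episode)

    def recall(self, path):
        """Return episodes from the deepest node on the path that has any."""
        node = self.root
        best = list(node.episodes)
        for label in path:
            node = node.children.get(label)
            if node is None:
                break  # no more specific context is known
            if node.episodes:
                best = list(node.episodes)
        return best


memory = EpisodeTree()
memory.store(("hungry", "food_seen"), "approach worked")
memory.store(("hungry", "food_seen", "rival_near"), "approach failed")

exact = memory.recall(("hungry", "food_seen", "rival_near"))
fallback = memory.recall(("hungry", "food_seen", "rain"))
unknown = memory.recall(("tired",))
```

A lookup visits at most one node per label in the path, so its cost depends on the depth of the context rather than on the total number of stored episodes.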

Most importantly, this version endowed the consciousness system with a complete, principled architecture, including an operational memory of “informational elements” that serve as context for awareness steps. These steps acquire new informational elements, update the informational picture, and determine the direction of the next iteration within the awareness cycle toward goal achievement.

This structure was optimized through four successive redesigns—each time rebuilt entirely. This iterative process itself mirrors the cycle of comprehension. The phenomenon of consciousness thus lost the mystical unknowability asserted by Chalmers and other philosophers and acquired a concrete, algorithmic implementation—free from thought experiments like the “Chinese Room” or “philosophical zombies.”

In science and engineering, the simplicity and power of a solution are often strong indicators of its correctness. If a theory, when implemented, yields bulky, convoluted, and inefficient structures, this signals its incompleteness. Conversely, an elegant, efficient, and scalable solution is powerful evidence that the theory has grasped the essence of the phenomenon. Code efficiency becomes a heuristic for evaluating theoretical quality.

Creating a “complete, principled architecture” for the consciousness system is not an endpoint but the creation of a new, more powerful research instrument. Now that the core “consciousness engine” is debugged, it can be used to conduct even more complex experiments and investigate subtler phenomena—such as creativity, intuition, and deep reflection.

Further model development requires deeper elaboration, increased complexity, and exponentially growing time investments and optimization iterations. Therefore, the current version was brought to a state of orderly completion and set aside. Undertaking development of a more advanced model now lacks not only the necessary boldness and optimism but, more importantly, the rationale to delve so deeply without external engagement: without the work being meaningfully examined by other researchers, without constructive feedback, and without community validation beyond mere subjective confidence in its correctness. Yet MVAP currently faces silent dismissal—precisely aligning with the Semmelweis effect (fornit.ru/68139).

The theory’s complexity, the time required to grasp it, and the demand for researchers to possess not just neuroscience expertise but also broad knowledge in physics, circuit design, and programming—and to challenge entrenched dogmas—all hinder its adoption.

Consequently, the top priority has shifted to popularization and convincing the scientific community—a focus that guided efforts throughout 2025.

Meanwhile, Alexei Parusnikov has independently launched his own initiative toward an even “more correct” implementation. He would not have started this were he not already sufficiently convinced of the robustness of the underlying principles. These efforts will certainly not be in vain; they will provide him with valuable experience and likely yield new, effective solutions.

Nick Fornit